ArtTalk is a project I developed with Andrew Stoddard and Maria Ascanio for the Intelligent Multimodal User Interfaces class at MIT. Our goal was to reimagine the way we interact with art in museums and galleries. With ArtTalk, visitors can point at a detail and share their comment aloud, creating a more interactive and engaging art viewing experience.

Motivations

Andrew and I had both taken the MIT class Extending the Museum. During the class, we visited many museums and noticed a pattern: visitors walked from one exhibit to the next, silently admiring the art but rarely talking about it. We wondered, "What if we could change that? What if we could make these gallery spaces more interactive?"

So, we designed ArtTalk. With ArtTalk, you don't have to keep your thoughts to yourself. You simply point at a detail and say your comment aloud. The application tracks your hand gesture and captures your voice, letting you pin your thoughts directly on the art piece.

There's no need for any extra gadgets: your voice and your gestures are the magic wands.

User Flow

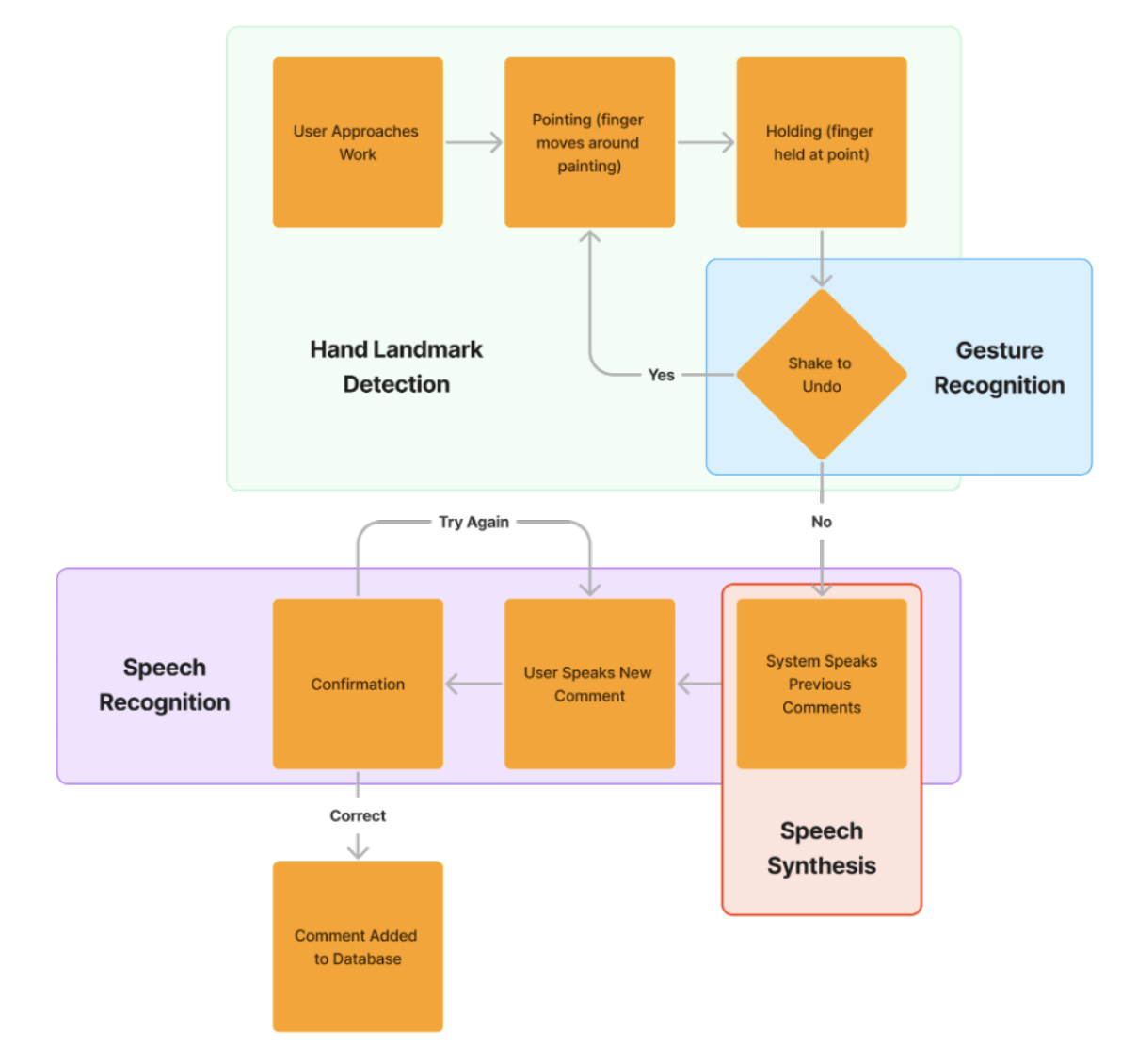

The diagram above is an early version of our user journey. Because no screen is involved, we wanted the user flow to include ample feedback from the system. The feedback is primarily auditory, with the system reading aloud the user's comments and the comments of other visitors.

Approach

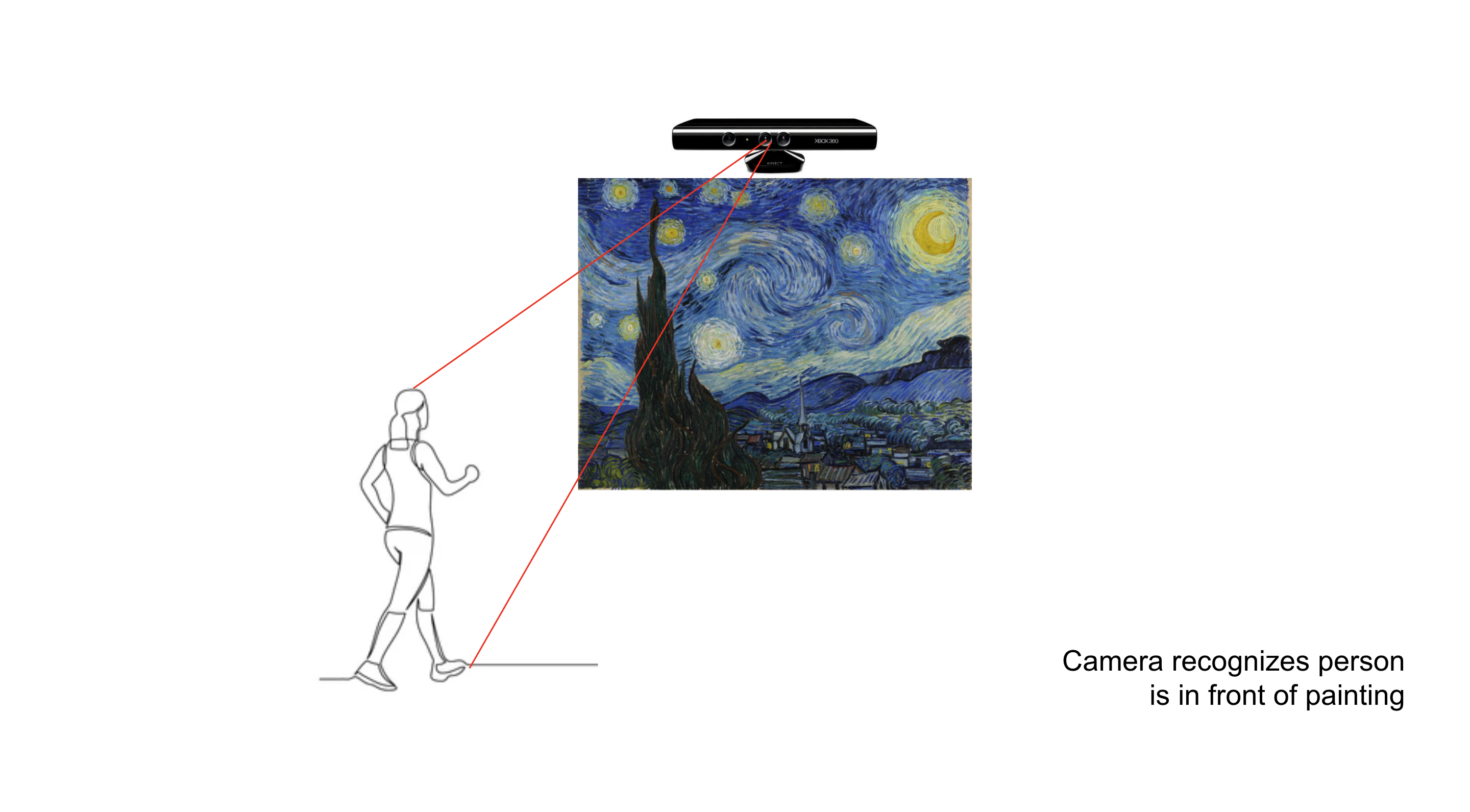

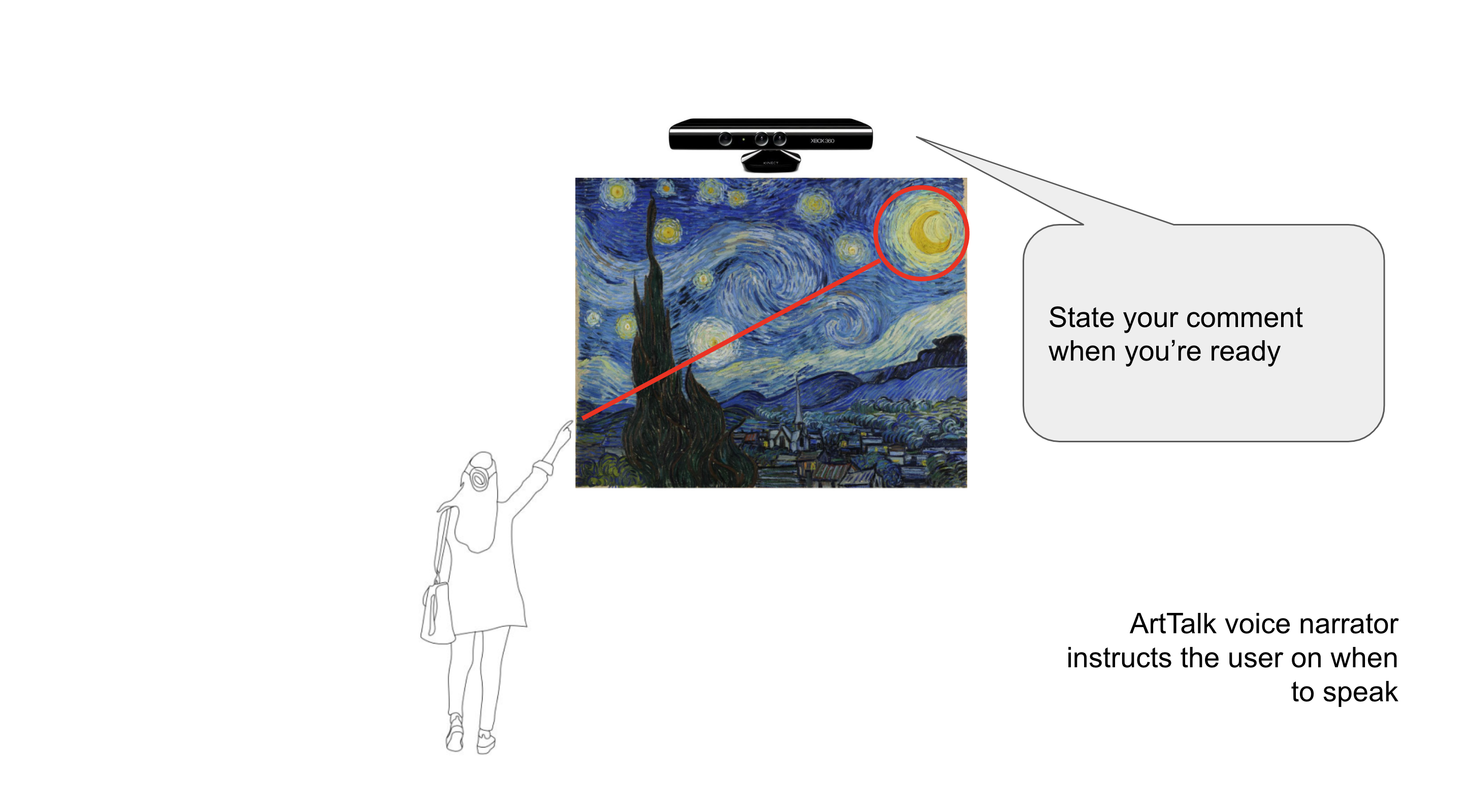

When a user walks up to an art piece, the ArtTalk system registers that they are starting a new interaction.

Engage

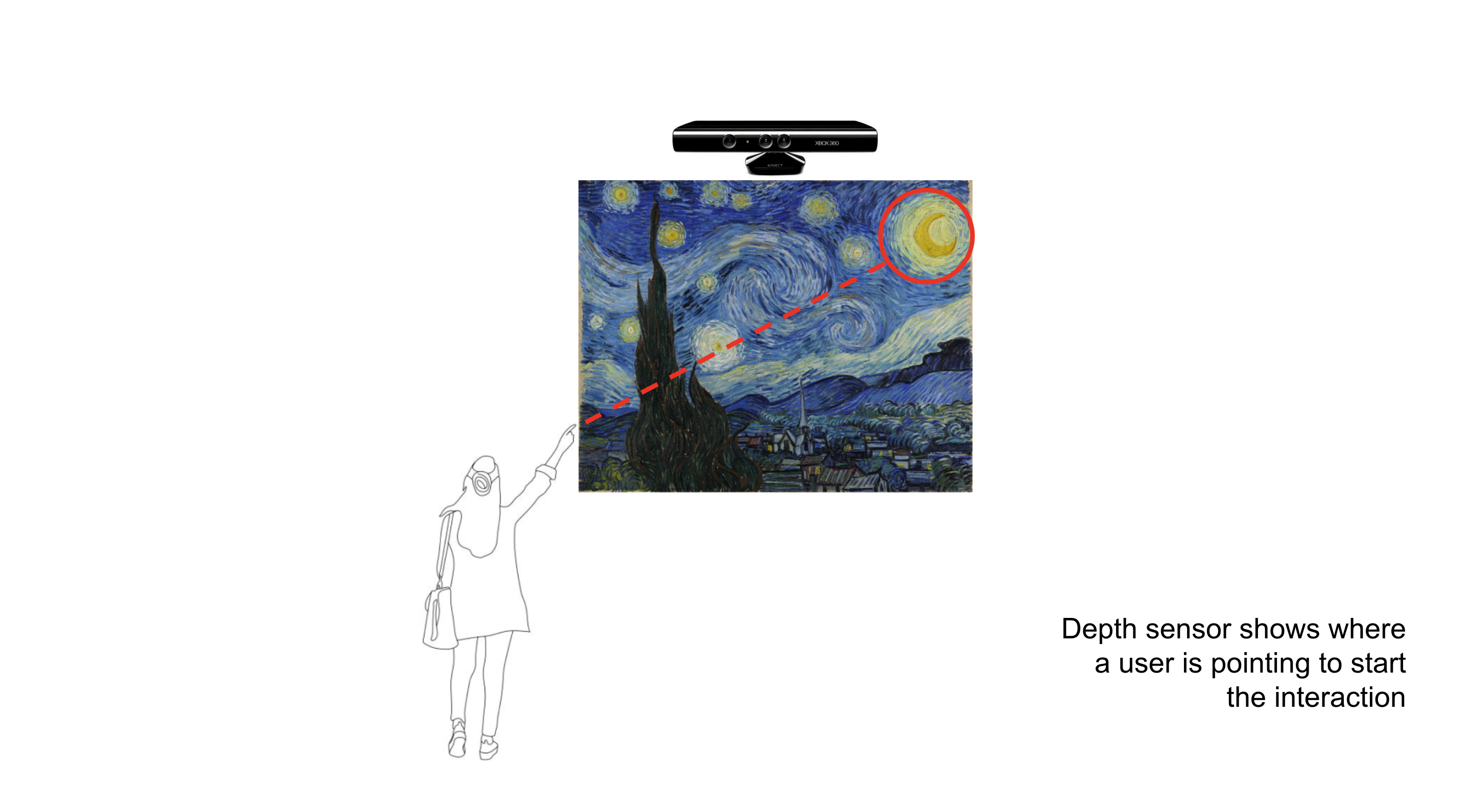

A user can engage with the system by pointing at different parts of the painting with their hand.
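As a rough illustration of how a pointing position could be derived from hand tracking, here is a minimal sketch. MediaPipe's hand model reports 21 landmarks per hand with normalized coordinates, and landmark 8 is the index fingertip. Everything else here is an assumption for illustration: the function name `pointerFromLandmarks`, the `mirrored` flag, and the idea that the camera frame lines up with the painting are hypothetical, not taken from the ArtTalk code.

```typescript
// A single hand landmark with normalized coordinates (0..1 within the camera frame).
interface Landmark {
  x: number;
  y: number;
  z: number;
}

// Index of the index fingertip in MediaPipe's 21-point hand model.
const INDEX_FINGER_TIP = 8;

// Keep a value inside the 0..1 range.
function clamp01(v: number): number {
  return Math.min(1, Math.max(0, v));
}

// Hypothetical helper: turn one hand's landmarks into a pointer position.
// Assumes the camera frame is aligned with the painting; `mirrored` flips x
// for a selfie-style camera feed.
function pointerFromLandmarks(
  landmarks: Landmark[],
  mirrored: boolean = true
): { x: number; y: number } | null {
  if (landmarks.length <= INDEX_FINGER_TIP) {
    return null; // no complete hand detected
  }
  const tip = landmarks[INDEX_FINGER_TIP];
  const x = mirrored ? 1 - tip.x : tip.x;
  return { x: clamp01(x), y: clamp01(tip.y) };
}
```

The result is a normalized point that can be scaled to the painting's width and height, whatever size it is displayed at.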

Past Comments

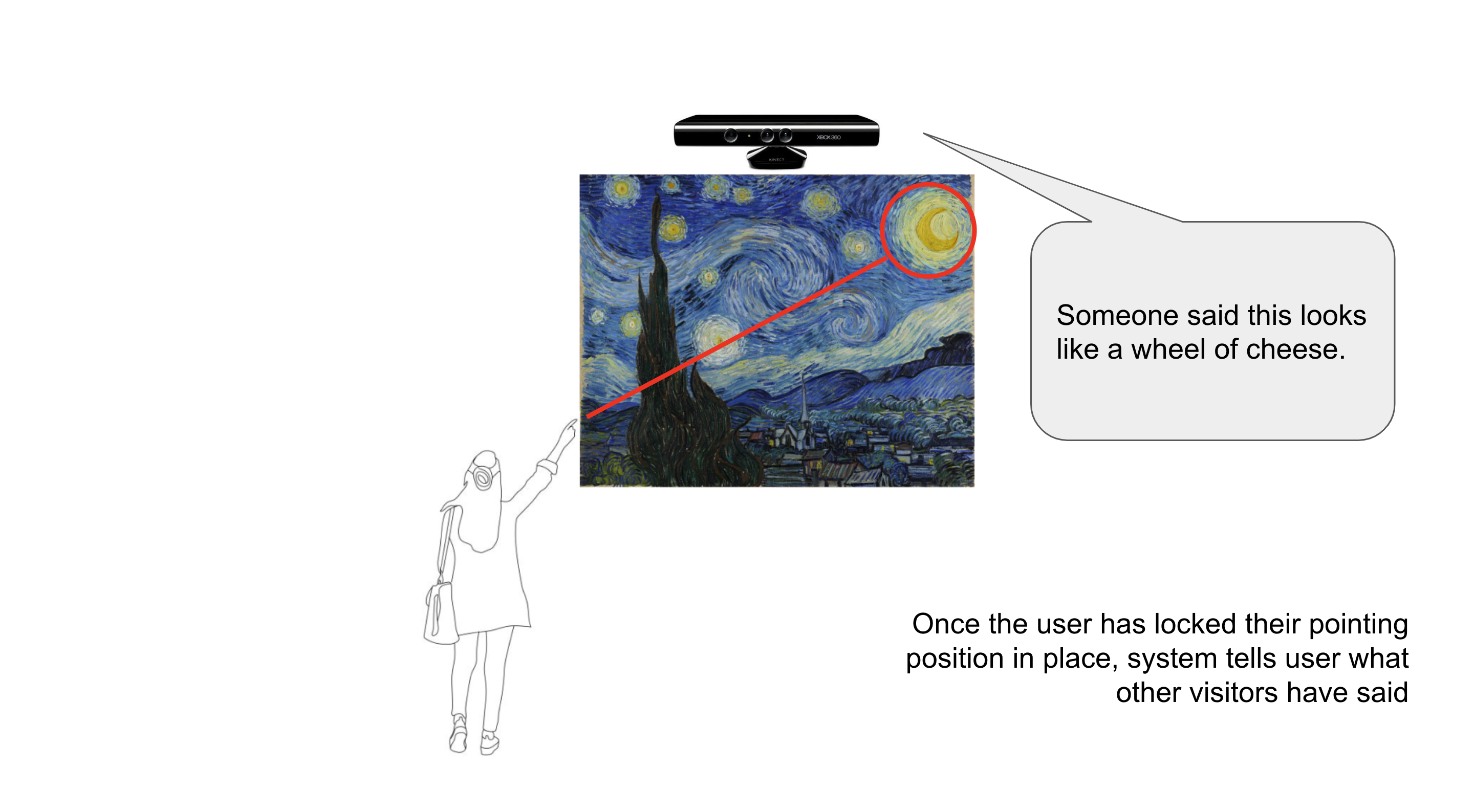

Once a user has locked their pointing position in place, the system will read aloud past comments from other visitors.
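One common way to "lock" a pointing position without a button is dwell detection: if the pointer stays within a small radius for long enough, the position is treated as selected. The sketch below shows that idea. It is an assumption about how locking could work, not a description of ArtTalk's actual logic, and `createDwellLock`, `radius`, and `holdMs` are hypothetical names and values.

```typescript
// A pointer position in normalized coordinates (0..1).
interface Point {
  x: number;
  y: number;
}

// Hypothetical dwell detector: returns an update function that reports the
// locked point once the pointer has stayed within `radius` of where it
// settled for at least `holdMs` milliseconds, and null otherwise.
function createDwellLock(radius: number = 0.05, holdMs: number = 1500) {
  let anchor: Point | null = null; // where the pointer settled
  let anchorTime = 0; // when it settled there

  return function update(p: Point, nowMs: number): Point | null {
    const moved =
      anchor === null ||
      Math.hypot(p.x - anchor.x, p.y - anchor.y) > radius;
    if (moved) {
      // Pointer left the dwell area (or this is the first sample): restart the timer.
      anchor = p;
      anchorTime = nowMs;
      return null;
    }
    return nowMs - anchorTime >= holdMs ? anchor : null;
  };
}
```

Calling the returned function once per camera frame with the current pointer position and a timestamp is enough. A non-null result would be the cue to start reading past comments for that spot.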

Prompt

ArtTalk then prompts the user to leave their own thoughts about the painting.

Comment

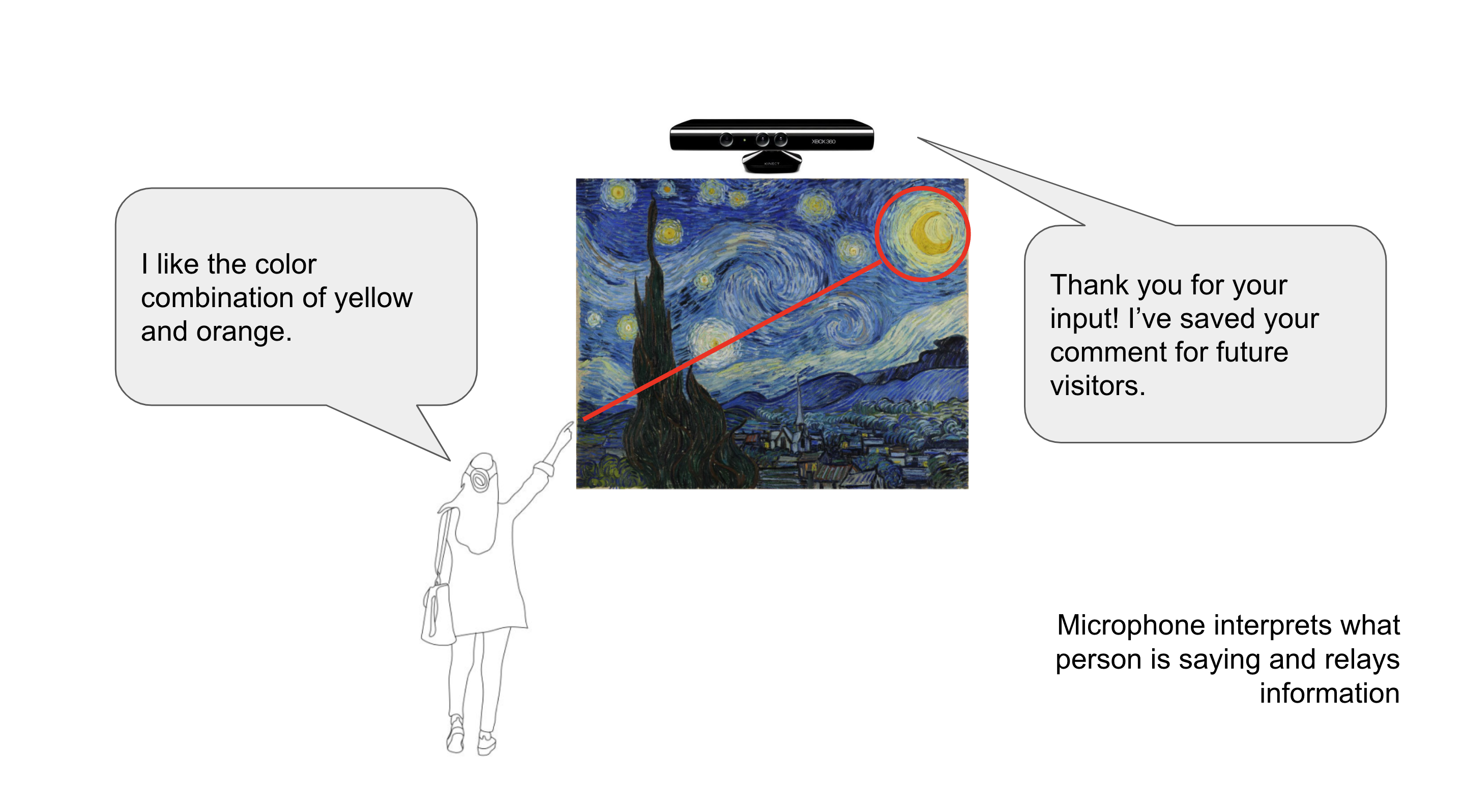

The user then speaks their comment aloud.

To wrap up the interaction, the system will read aloud the user's comment and confirm that their comment has been recorded.

System Diagram

The user flow should feel seamless and natural. To achieve this, we used a state machine to manage the user's interaction with the system and keep track of which step of the flow they are in.
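To make the state machine idea concrete, here is a minimal sketch based on the steps in the user flow (approach, engage, past comments, prompt, comment, confirmation). The state names, event names, and the `next` function are hypothetical and chosen for illustration. The real implementation in the repository may be organized differently.

```typescript
// Hypothetical states, one per step of the user flow described above.
type FlowState =
  | "idle"
  | "approach"
  | "engage"
  | "pastComments"
  | "prompt"
  | "comment"
  | "confirm";

// Hypothetical events that move the interaction forward or reset it.
type FlowEvent =
  | "USER_DETECTED"
  | "HAND_RAISED"
  | "POSITION_LOCKED"
  | "COMMENTS_READ"
  | "PROMPT_SPOKEN"
  | "COMMENT_CAPTURED"
  | "CONFIRMATION_SPOKEN"
  | "USER_LEFT";

// Transition table: for each state, the events it reacts to and where they lead.
const transitions: Record<FlowState, Partial<Record<FlowEvent, FlowState>>> = {
  idle: { USER_DETECTED: "approach" },
  approach: { HAND_RAISED: "engage", USER_LEFT: "idle" },
  engage: { POSITION_LOCKED: "pastComments", USER_LEFT: "idle" },
  pastComments: { COMMENTS_READ: "prompt", USER_LEFT: "idle" },
  prompt: { PROMPT_SPOKEN: "comment", USER_LEFT: "idle" },
  comment: { COMMENT_CAPTURED: "confirm", USER_LEFT: "idle" },
  confirm: { CONFIRMATION_SPOKEN: "idle", USER_LEFT: "idle" },
};

// Return the next state; events that don't apply to the current state are ignored.
function next(state: FlowState, event: FlowEvent): FlowState {
  return transitions[state][event] ?? state;
}
```

Keeping the transitions in one table means an out-of-order event, such as a captured comment before a position is locked, leaves the state unchanged, which helps a screenless flow stay predictable.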

Technical Details

Frontend: Next.js

Styling: Tailwind CSS

Database ORM: Prisma

Database: PostgreSQL

Hand Landmark Detection and Gesture Recognition: Google MediaPipe

Web Hosting: Vercel

Speech Recognition and Synthesis: Web Speech API (WebKit speech recognition and speech synthesis)

Sources

ArtTalk GitHub repository: https://github.com/trudypainter/arttalk

ArtTalk live demo: https://arttalk.vercel.app/

ArtTalk final paper: /arttalk/arttalk_paper.pdf